DataSource Chat AI

A Chat AI is an artificial intelligence system designed to converse with users in natural language. It uses techniques such as natural language processing (NLP) and machine learning to understand user input and generate human-like responses in real time, supporting tasks such as answering questions, providing recommendations, and holding interactive dialogues. Popular examples include virtual assistants and AI-driven customer support chatbots.

Supported models and sub-models

- OpenAI
  - GPT-3.5 Turbo
- Groq
  - Meta Llama 3 70B
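The supported combinations above can be checked before a data source is saved. The sketch below is a hypothetical Python helper, not part of the QuickIntegration Platform: `SUPPORTED_MODELS` and `validate_model_selection` are illustrative names, and the table simply copies the provider and sub-model pairs listed on this page.

```python
from __future__ import annotations

# Hypothetical lookup: provider ("Model") -> supported sub-models, as listed on this page.
SUPPORTED_MODELS: dict[str, tuple[str, ...]] = {
    "OpenAI": ("GPT-3.5 Turbo",),
    "Groq": ("Meta Llama 3 70B",),
}


def validate_model_selection(model: str, sub_model: str) -> None:
    """Raise ValueError unless sub_model is listed under the given model (provider)."""
    sub_models = SUPPORTED_MODELS.get(model)
    if sub_models is None:
        raise ValueError(
            f"unsupported model {model!r}; choose one of {sorted(SUPPORTED_MODELS)}"
        )
    if sub_model not in sub_models:
        raise ValueError(
            f"sub-model {sub_model!r} is not available for {model!r}; "
            f"choose one of {list(sub_models)}"
        )
```

For example, `validate_model_selection("Groq", "Meta Llama 3 70B")` passes silently, while pairing Groq with GPT-3.5 Turbo raises a `ValueError`, because each sub-model belongs to exactly one provider.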

Practical Implementation

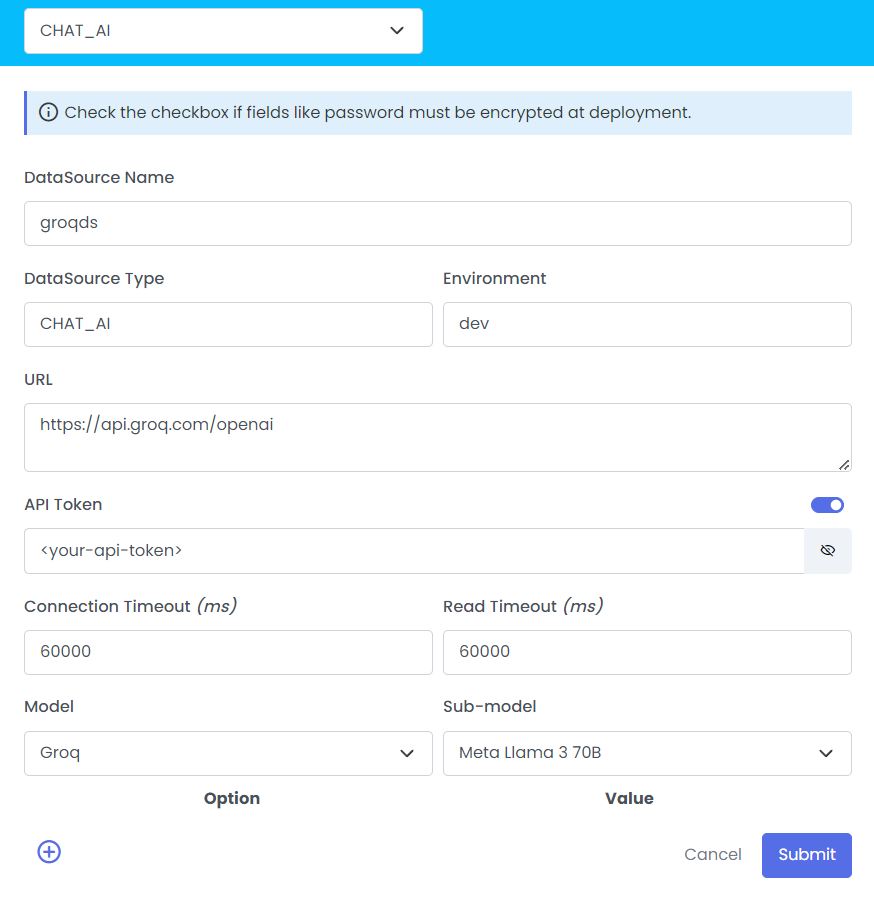

Open a new project in the QuickIntegration Platform, then follow these steps to configure the data source for your flow:

- Click Connection Properties.

- Select the DataSource Type (CHAT_AI) from the drop-down list.

- Provide the credentials.

- Click Submit to save the credentials.

- The configured properties then appear on the left side of the palette, ready to use in your API.

| Field | Description | Example |
|---|---|---|
| DataSource Name | The name of the data source as configured in Connection Properties. | groqds |
| DataSource Type | The type of data source. For a chat AI data source, this is always CHAT_AI. | CHAT_AI |
| Environment | The environment in which the data source is configured, such as a development or production environment. | dev |
| URL | The base URL of the chat AI provider's API. | https://api.groq.com/openai |
| API Token | The token used to authenticate and authorize requests to the provider's API, ensuring secure communication between the client and the server. | <your-api-token> |
| Connection Timeout | The maximum time, in milliseconds, the client waits while establishing a connection to the API server. | 60000 (ms) |
| Read Timeout | The maximum time, in milliseconds, the client waits for a response from the server after the connection is established. | 60000 (ms) |
| Model | The AI provider whose models the data source uses. Supported values: OpenAI, Groq. | Groq |
| Sub-model | The specific model offered by the selected provider. Supported values: GPT-3.5 Turbo (OpenAI), Meta Llama 3 70B (Groq). | Meta Llama 3 70B |
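The connection properties can be mirrored in code, for example to validate them before calling the provider. The sketch below is a hypothetical Python dataclass, not the platform's internal API. It assumes the URL field holds the base of an OpenAI-compatible API (as in the Groq example), so chat completions are served at `<URL>/v1/chat/completions` with bearer-token authentication, and it converts the millisecond timeouts into the `(connect, read)` pair in seconds that HTTP clients such as the `requests` library accept.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ChatAIDataSource:
    """Hypothetical in-code mirror of the CHAT_AI connection properties."""

    name: str
    environment: str
    url: str
    api_token: str
    model: str
    sub_model: str
    connection_timeout_ms: int = 60000
    read_timeout_ms: int = 60000
    datasource_type: str = "CHAT_AI"

    def __post_init__(self) -> None:
        # Reject obviously invalid configurations as soon as the object is built.
        if self.datasource_type != "CHAT_AI":
            raise ValueError("DataSource Type must be CHAT_AI")
        if self.connection_timeout_ms <= 0 or self.read_timeout_ms <= 0:
            raise ValueError("timeouts must be positive millisecond values")

    def chat_completions_url(self) -> str:
        # Assumption: an OpenAI-compatible API exposing /v1/chat/completions under the base URL.
        return self.url.rstrip("/") + "/v1/chat/completions"

    def auth_headers(self) -> dict[str, str]:
        # OpenAI-compatible APIs authenticate with a bearer token.
        return {
            "Authorization": f"Bearer {self.api_token}",
            "Content-Type": "application/json",
        }

    def timeouts_seconds(self) -> tuple[float, float]:
        # (connect, read) in seconds, converted from the millisecond fields.
        return (self.connection_timeout_ms / 1000, self.read_timeout_ms / 1000)
```

With the example values from the table (`groqds`, `dev`, `https://api.groq.com/openai`, `Groq`, `Meta Llama 3 70B`), `chat_completions_url()` returns `https://api.groq.com/openai/v1/chat/completions` and `timeouts_seconds()` returns `(60.0, 60.0)`. Keeping the two timeouts separate lets a slow completion (read timeout) be distinguished from an unreachable server (connection timeout).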

| Option | Description | Example |
|---|---|---|
| Log Probability | Whether to return the log probabilities of the generated tokens. The log probability of a token is the logarithm of the probability the model assigned to it; working in log space keeps very small probabilities numerically stable and makes relative likelihoods easy to compare. | true |
| Temperature | The sampling temperature, which controls the apparent creativity of generated completions. Higher values make the output more random; lower values make it more focused and deterministic. Avoid modifying both temperature and top_p in the same completions request, because the interaction of these two settings is difficult to predict. | 0.8 |
| Frequency Penalty | A number between -2.0 and 2.0. Positive values penalize new tokens based on how often they already appear in the text so far, decreasing the model’s likelihood of repeating the same line verbatim. | 0.0 |
| Max Tokens | The maximum number of tokens to generate in the chat completion. The combined length of input and generated tokens is limited by the model’s context length. | 4096 |
| User | A unique identifier representing your end user, which can help the provider (for example, OpenAI) monitor and detect abuse. | - |
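The options above correspond to request parameters of an OpenAI-style chat completions call. The sketch below is a hypothetical Python helper that assembles such a request body and checks the documented ranges; it is not the platform's implementation. The mapping from option labels to the parameter names `logprobs`, `temperature`, `frequency_penalty`, `max_tokens`, and `user` is an assumption based on the OpenAI chat completions API, the 0 to 2 temperature range comes from OpenAI's documentation, and support for individual parameters (such as `logprobs`) may differ between providers.

```python
from __future__ import annotations

import math
from typing import Any, Optional


def build_chat_request(
    model_id: str,
    messages: list[dict[str, str]],
    *,
    logprobs: bool = False,
    temperature: Optional[float] = None,
    frequency_penalty: float = 0.0,
    max_tokens: Optional[int] = None,
    user: Optional[str] = None,
) -> dict[str, Any]:
    """Build a JSON-serialisable chat completions body from the options (hypothetical helper)."""
    # OpenAI documents temperature in the range 0 to 2.
    if temperature is not None and not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0.0 and 2.0")
    # Range stated for the Frequency Penalty option.
    if not -2.0 <= frequency_penalty <= 2.0:
        raise ValueError("frequency_penalty must be between -2.0 and 2.0")
    if max_tokens is not None and max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")

    body: dict[str, Any] = {
        "model": model_id,
        "messages": messages,
        "logprobs": logprobs,
        "frequency_penalty": frequency_penalty,
    }
    # Send optional settings only when chosen, so the provider's defaults apply otherwise.
    if temperature is not None:
        body["temperature"] = temperature
    if max_tokens is not None:
        body["max_tokens"] = max_tokens
    if user:
        body["user"] = user
    return body


def token_probability(logprob: float) -> float:
    """Convert a returned log probability back into a plain probability."""
    return math.exp(logprob)
```

For example, `build_chat_request("gpt-3.5-turbo", [{"role": "user", "content": "Hello"}], logprobs=True, temperature=0.8, max_tokens=4096)` produces a body that can be serialised with `json.dumps` and posted to the chat completions endpoint. Temperature is left out unless set, so it is easy to follow the advice above about not tuning temperature and top_p together.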